# SQL Maintenance – Dedicated maintenance mechanism for large BPS databases: indexes and statistics

Applies to version: 2022.1.3 and above; author: Maciej Kieblesz

Introduction

This article describes a method of maintaining databases to ensure the highest possible performance of the SQL engine, and thus the BPS application itself. In practice, this will mean a reduction in the number of 'timeout' errors, for example, when loading a report. This mechanism is tailored to BPS databases.

Disclaimer:

Webcon is not responsible for the maintenance of customer databases, but it is recommended that such operations be performed on BPS databases once a day outside business hours.

The article uses open source scripts available on the public Internet. These scripts are successfully used by WEBCON in own installations and those of selected clients. However, be sure to test such scripts on dedicated environments before using them in production (recommended procedure for any scripts downloaded from the Internet).

The examples given herein are one of the possible database maintenance procedures; they can be modified depending on the conditions of a specific environment.

Source: chapter 7.1 of the document:

https://community.webcon.com/uploads/editor/dropped/webcon_bps_2021_infrastructure_izFWwC.pdf

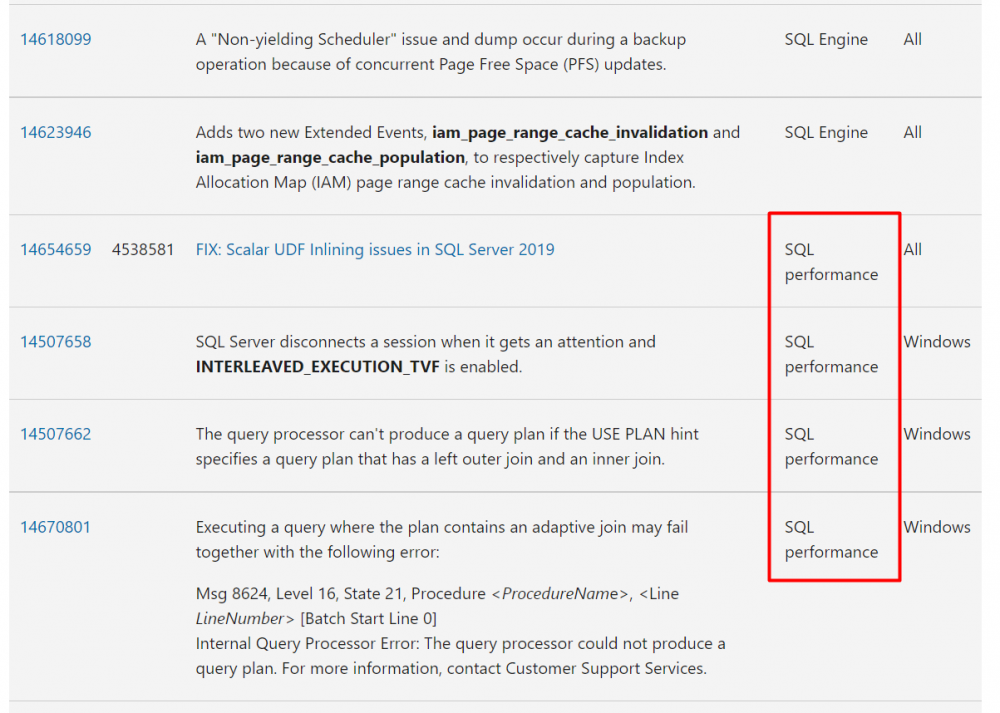

As there are performance improvements to the engine itself, it is also recommended to install the latest Microsoft SQL Server updates.

An example with Cumulative Update 16 for SQL Server 2019:

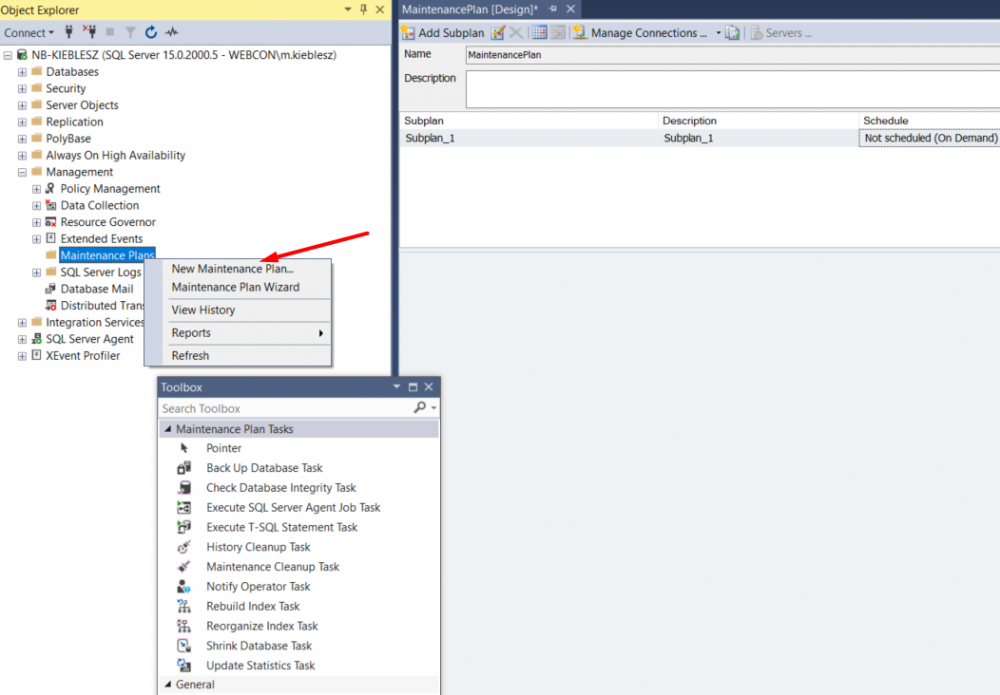

Creating a job

SQL Server Management Studio allows to create a maintenance plan manually from the user interface, from separate tasks available in the Toolbox:

In this case, the job should not be created through the interface; instead use the ready-made open source scripts by Ola Hallengren:

https://ola.hallengren.com/sql-server-index-and-statistics-maintenance.html

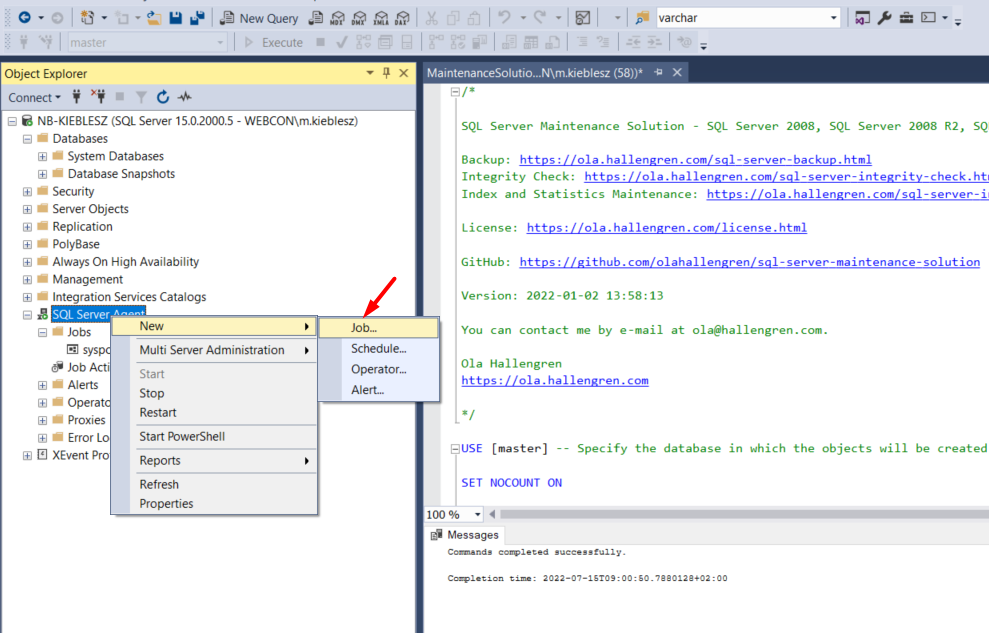

The MaintenanceSolution.sql script is available in the Download section. Download and paste it into the SQL instance in the new query window.

Link to download the script directly:

https://ola.hallengren.com/scripts/MaintenanceSolution.sql

Run the script in the context of the database where the logs of the operations performed will be saved – this can be done by default on the master system database or on a newly created database. In the latter case, create the database beforehand and change the USE [master] command to USE [Name_base] in the script.

Since only performance is considered here, change the @CreateJobs parameter to NULL and run the script by selecting Execute.

The next step is to create a new job, which can be easily done from the interface:

In the General tab, change the name of the job to one that suggests what it does, such as ‘Maintenance_Indexes_Statistics’. It is also possible here to change the account (Owner) in the context of which the job will be performed – preferably it should be the system administrator, e.g. sa.

Step 1

In the Steps tab, select New, enter a name, and paste the following parameters in the Command field:

EXECUTE dbo.IndexOptimize

@Databases = 'ALL_DATABASES',

@FragmentationLow = NULL,

@FragmentationMedium = 'INDEX_REORGANIZE,INDEX_REBUILD_ONLINE,INDEX_REBUILD_OFFLINE',

@FragmentationHigh = 'INDEX_REBUILD_ONLINE,INDEX_REBUILD_OFFLINE',

@FragmentationLevel1 = 15,

@FragmentationLevel2 = 40,

@UpdateStatistics = 'ALL',

@LogToTable = 'Y',

@OnlyModifiedStatistics = 'Y',

@Indexes = 'ALL_INDEXES, -BPS_Content_PROD.dbo.SolrIndexerQueueItems,-BPS_Content_PROD.dbo.SolrActivityQueueItems,-BPS_Content_PROD.dbo.SolrElementQueueItems,-BPS_Content_PROD.dbo.SolrFileQueueItems,-BPS_Content_PROD.dbo.SolrUtilityQueueItems'

Enter a valid BPS_Content database name in the @Indexes parameter.

Press OK to confirm.

If there are more databases within the instance, the parameter @Databases = 'ALL_DATABASES', can be replaced with @Databases = '%BPS%',. Only databases with 'BPS' in the name will be taken into account in that case. This step will reorganize/rebuild indexes and update statistics with default sampling. Reorganization/rebuilding of indexes has less impact on SSDs than HDDs, but the solution is intended to be universal, independent of hardware. Description of all parameters is available on the script author's website.

Step 2

To add a second step, follow the same procedure, but specify different parameters. When completing the @Databases parameter, provide the exact name of the Content BPS database, because operations will be performed only for this particular database. The same database name should also be included in @Indexes, so that it is possible to skip tables for which an UPDATE STATISTICS WITH FULLSCAN operation is not required. Additionally, the statistics have already been updated with the default sampling in the first step. Theoretically, there are more tables, but the UPDATE STATISTICS WITH FULLSCAN operation of these specific ones takes the longest for large BPS installations (skipping these three indexes saves about 30 minutes of job duration for a 1 TB database – the @Indexes parameter is added only to reduce the job execution time).

EXECUTE dbo.IndexOptimize

@Databases = 'BPS_Content_PROD',

@FragmentationLow = NULL,

@FragmentationMedium = NULL,

@FragmentationHigh = NULL,

@UpdateStatistics = 'INDEX',

@LogToTable = 'Y',

@StatisticsSample=100,

@OnlyModifiedStatistics = 'Y',

@Indexes = 'ALL_INDEXES, -BPS_Content_PROD.dbo.WFHistoryElements, -BPS_Content_PROD.dbo.WFHistoryElementDetails, -BPS_Content_PROD.dbo.WFActionExecutions,-BPS_Content_PROD.dbo.HistoryTasks,-BPS_Content_PROD.dbo.SolrIndexerQueueItems,-BPS_Content_PROD.dbo.SolrActivityQueueItems,-BPS_Content_PROD.dbo.SolrElementQueueItems,-BPS_Content_PROD.dbo.SolrFileQueueItems,-BPS_Content_PROD.dbo.SolrUtilityQueueItems'

In the second step, in the Advanced tab, change the On success action: option from Go to the next step to Quit the job reporting success. Without such a change, you will be prompted accordingly when saving the job. After confirmation, the parameter will be automatically altered. This step will run the Update Statistics job with the FULLSCAN option for index statistics, which greatly affects performance.

Step 2 - Modification for SQL engine in newer versions of SQL Server

Starting with SQL Server 2016 SP1 Cumulative Update 4, it is possible to add the option PERSIST_SAMPLE_PERCENT = ON to the statistics update command, which prevents the loss of defined sampling after statistics auto-update.

Implementation of this mechanism will require alterning the IndexOptimize procedure by running the linked script in the context of the database on which the MaintenanceSolution.sql script was run

https://raw.githubusercontent.com/WEBCONAPPS/sql-server-maintenance-solution/master/IndexOptimize.sql

and adding the following parameter to execute Step 2:

@CurrentStatisticsPersistedSample = 'Y',

EXECUTE dbo.IndexOptimize

@Databases = 'BPS_Content_PROD',

@FragmentationLow = NULL,

@FragmentationMedium = NULL,

@FragmentationHigh = NULL,

@UpdateStatistics = 'INDEX',

@LogToTable = 'Y',

@StatisticsSample=100,

@OnlyModifiedStatistics = 'Y',

@CurrentStatisticsPersistedSample = 'Y',

@Indexes = 'ALL_INDEXES, -BPS_Content_PROD.dbo.WFHistoryElements, -BPS_Content_PROD.dbo.WFHistoryElementDetails, -BPS_Content_PROD.dbo.WFActionExecutions,-BPS_Content_PROD.dbo.HistoryTasks,-BPS_Content_PROD.dbo.SolrIndexerQueueItems,-BPS_Content_PROD.dbo.SolrActivityQueueItems,-BPS_Content_PROD.dbo.SolrElementQueueItems,-BPS_Content_PROD.dbo.SolrFileQueueItems,-BPS_Content_PROD.dbo.SolrUtilityQueueItems'

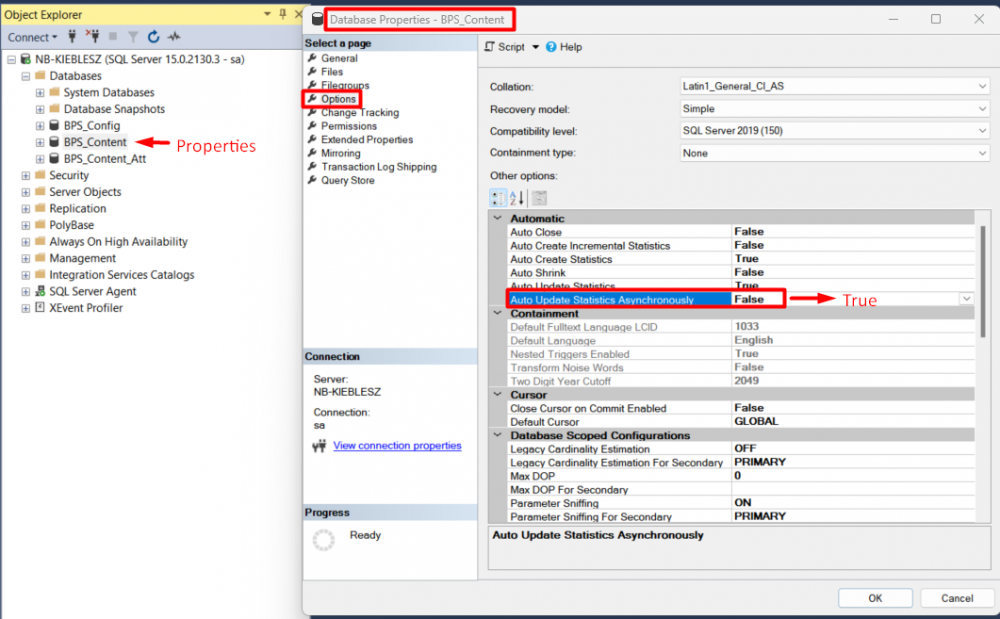

If you use the @CurrentStatisticsPersistedSample = 'Y' parameter, you should also change the auto-update mode of statistics in BPS databases to asynchronous.

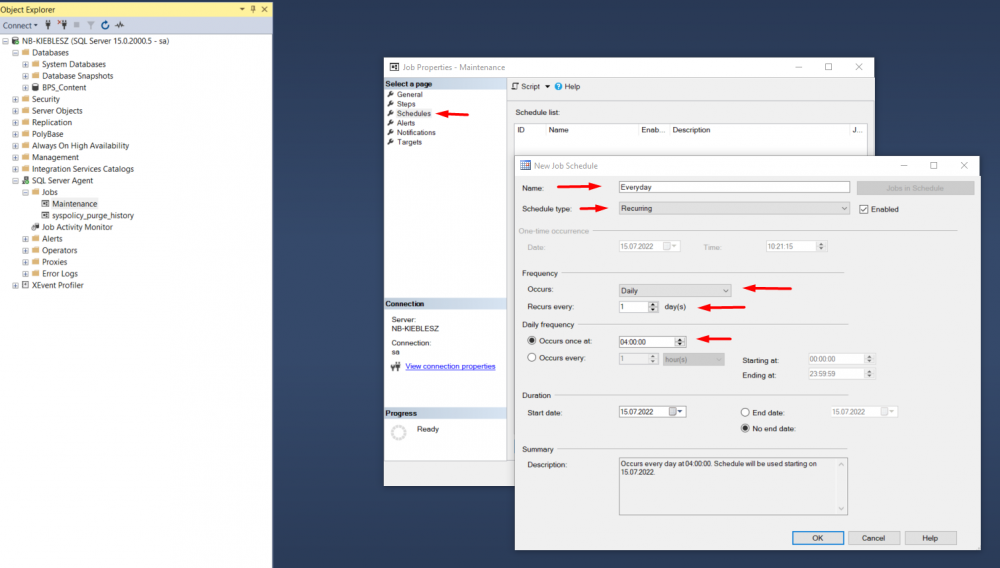

Schedules

Create a suitable maintenance schedule – ideally, the maintenance should take place outside business hours.

Confirm the changes made by selecting OK.

Effect of other settings on SQL engine performance

Apart from Maintenance, the performance of the SQL engine will also be affected by other SQL instance settings, e.g. the MaxDOP parameter, which (according to Microsoft) should be set according to the SQL Server version, the number of NUMA nodes and the number of logical processors, as specified in the tables in the article: Configure the max degree of parallelism (server configuration option) - SQL Server | Microsoft Learn .

Cost Threshold for Parallelism – the default value of ‘5’ may be too low. In this case, the recommendations vary, and according to one of them, the value of the parameter for parallel execution of the maintenance plan should not be lower than 50. Microsoft does not give official guidelines in this regard and indicates that the parameter should be adjusted to a specific SQL instance after testing different configurations.

The number of mdf/ndf files of the TempDB system database should also match the number of VPCUs (max. 8). These are the SQL instance settings, but keep in mind that the engine performance is affected by the physical parameters of the SQL machine – the speed of disks (IOPS) or processors, as well as RAM.

Database integrity and backup

Ola Hallengren also describes solutions for checking database integrity and for creating backups.

The database administrator should independently take care of full, differential backups, and the log, so that in emergency situations it is possible to restore the database, regardless of the time of failure.

More information on backup is available at:

https://docs.microsoft.com/en-us/sql/t-sql/statements/backup-transact-sql?view=sql-server-ver16

The administrator should also verify the database integrity (checkdb) in order to early detect the so-called ‘corruption’ of the database:

Restoring the database from a corrupted backup will not be possible.