Hi,

We have a project in Webcon that has many processes and dictionaries (more than 150). The application has been in production for, let's say, two years. In the first year, the database size was manageable, but now it's getting out of hand.

Does anyone have any tips, tricks, or suggestions for dealing with large amounts of data?

Are there optimization techniques we can employ?

Any opinions are welcome! Thanks!

How to handle a big content database?

Hi Robert,

are you running BPS 2023 R2?

If this is not the case it may help you to some degree. From the change log:

Resolved the issue of the AutomationSessionExecutions table growing more

than expected.

Introduced a series of improvements which aim to limit the size of this table,

e.g.:

- deleting/archiving elements will remove all connected records

in AutomationSessionExecutions,

- reduced the number of data stored in JSON by over half,

- replaced two indexes in AutomationSessionExecutions with one.

Best regards,

Daniel

Hi Robert,

are you running BPS 2023 R2?

If this is not the case it may help you to some degree. From the change log:

Resolved the issue of the AutomationSessionExecutions table growing more

than expected.

Introduced a series of improvements which aim to limit the size of this table,

e.g.:

- deleting/archiving elements will remove all connected records

in AutomationSessionExecutions,

- reduced the number of data stored in JSON by over half,

- replaced two indexes in AutomationSessionExecutions with one.

Best regards,

Daniel

I run on 2022.1.4.248 and this versions also has that problem fixed, without the fix the content database would be like 500GB in total, so it helped a bit when I upgraded the version a few months ago but 250GB of content still remains :)

I run on 2022.1.4.248 and this versions also has that problem fixed, without the fix the content database would be like 500GB in total, so it helped a bit when I upgraded the version a few months ago but 250GB of content still remains :)

I'm not aware of the changes in the "older" versions, as we need to keep up. Even so I should have remembered, that these versions also receive updates.

Database sizes are not my area of expertise. So I can offer only general tips. Some of which no longer are an option.

1. Separate attachment databases

You can create multiple attachment databases. A process can be mapped to one attachment database. After the first attachment has been added it's no longer possible to change this setting using the Designer Studio. I have no idea how this can be achieved afterwards. I would need this as I have forgotten to change it during an import of a new application.

2. Helper processes ?

Do you have any "helper" processes which only run in the background to achieve some things? You could triggere a deletion of them either immediately or after some time.

3. Timeouts / Versions

Timeouts may create new versions indefinitely. You could check for a high version number and see if a timeout is the cause. It may be an option to switch to a cyclical action.

https://docs.webcon.com/docs/2023R2/Studio/Workflow/Workflow_GlobalAction3/module_2_4_7_4_2

It's great that there's now an online documentation. :)

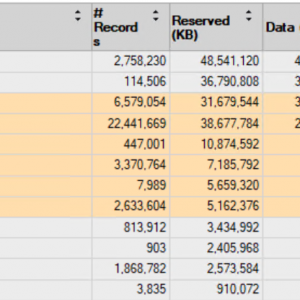

4. SolrIIndexerQueue table

Do you have any issues with SOLR? You have about 450k elements but 3.300k queued items. In my opinion this table should contain a low number of items at most.

5. Archive Database

It could be an option to move no longer actively accessed elements to the archive. I'm not sure whether this applies in your case as you have been in production for about two years.

6. Split the database

I've no idea whether this is a good approach but you could "move" the processes which create a lot of data to a "new" database. The "movement" would be copying the database, removing the privileges on the applications so that the users don't see them twice and delete the data and applications in one of them. Afterwards you would need trigger the index of the databases for SOLR.

If you have any external systems referencing the data this can cause a problem. As the DB id in the URL would change this may also break links.

During this you could move the databases to different hard drive volumes if this is an issue.

That's all I can think of at the moment.

Best regards,

Daniel

I'm not aware of the changes in the "older" versions, as we need to keep up. Even so I should have remembered, that these versions also receive updates.

Database sizes are not my area of expertise. So I can offer only general tips. Some of which no longer are an option.

1. Separate attachment databases

You can create multiple attachment databases. A process can be mapped to one attachment database. After the first attachment has been added it's no longer possible to change this setting using the Designer Studio. I have no idea how this can be achieved afterwards. I would need this as I have forgotten to change it during an import of a new application.

2. Helper processes ?

Do you have any "helper" processes which only run in the background to achieve some things? You could triggere a deletion of them either immediately or after some time.

3. Timeouts / Versions

Timeouts may create new versions indefinitely. You could check for a high version number and see if a timeout is the cause. It may be an option to switch to a cyclical action.

https://docs.webcon.com/docs/2023R2/Studio/Workflow/Workflow_GlobalAction3/module_2_4_7_4_2

It's great that there's now an online documentation. :)

4. SolrIIndexerQueue table

Do you have any issues with SOLR? You have about 450k elements but 3.300k queued items. In my opinion this table should contain a low number of items at most.

5. Archive Database

It could be an option to move no longer actively accessed elements to the archive. I'm not sure whether this applies in your case as you have been in production for about two years.

6. Split the database

I've no idea whether this is a good approach but you could "move" the processes which create a lot of data to a "new" database. The "movement" would be copying the database, removing the privileges on the applications so that the users don't see them twice and delete the data and applications in one of them. Afterwards you would need trigger the index of the databases for SOLR.

If you have any external systems referencing the data this can cause a problem. As the DB id in the URL would change this may also break links.

During this you could move the databases to different hard drive volumes if this is an issue.

That's all I can think of at the moment.

Best regards,

Daniel

Hi Daniel.

Transferring attachments to another or a new database is a straightforward process. Here are the steps to achieve this:

1. Create a new database specifically for storing attachments associated with the process.

2. Update the DEF_AttachmentsDatabase entry in the WFDefinitions table for the desired process. Enter the name of the newly created attachment database.

3. Restart the ISS/Pool app and service.

4. Transfer the data from the old database containing process attachments to the newly created database. Exclude the columns [ATF_ID] and [ATF_RowVersion].

5. Verify if everything is OK.

6. Delete the attachments from the table in the old database.

Ensure you have performed appropriate backups before proceeding with these steps.

If your process uses multiple attachment databases, adjust the values in DEF_AttachmentsDatabaseColName and DEF_AttachmentsDatabaseWFCONID from WFDefinitions accordingly (in this scenario, DEF_AttachmentsDatabase , will be null). Update them based on the value specified in the attribute of the element defining the attachment database for your process.

Regards.

Hi Daniel.

Transferring attachments to another or a new database is a straightforward process. Here are the steps to achieve this:

1. Create a new database specifically for storing attachments associated with the process.

2. Update the DEF_AttachmentsDatabase entry in the WFDefinitions table for the desired process. Enter the name of the newly created attachment database.

3. Restart the ISS/Pool app and service.

4. Transfer the data from the old database containing process attachments to the newly created database. Exclude the columns [ATF_ID] and [ATF_RowVersion].

5. Verify if everything is OK.

6. Delete the attachments from the table in the old database.

Ensure you have performed appropriate backups before proceeding with these steps.

If your process uses multiple attachment databases, adjust the values in DEF_AttachmentsDatabaseColName and DEF_AttachmentsDatabaseWFCONID from WFDefinitions accordingly (in this scenario, DEF_AttachmentsDatabase , will be null). Update them based on the value specified in the attribute of the element defining the attachment database for your process.

Regards.

HI Koral,

just to make it clear for everyone who reads this.

If I'm not mistaken, all the steps are handled directly on the database level.

Therefore, this is not really a supported operation but achievable.

Did I understand this correctly?

Best regards,

Daniel

HI Koral,

just to make it clear for everyone who reads this.

If I'm not mistaken, all the steps are handled directly on the database level.

Therefore, this is not really a supported operation but achievable.

Did I understand this correctly?

Best regards,

Daniel

HI

yes, these are operations performed directly on the database.

Regards.

HI

yes, these are operations performed directly on the database.

Regards.

I'm also curious how to optimised proceses.

Our database is 10 times larger than Yours (and we did it in one year).

For our purposes every ms does matter.

There is little to none info about efficiency of certain actions.