Hi everyone,

I'm wondering what would be the best approach for the following use case.

There may be between 300 and 500 active claim workflow instances. The costs for these claims are tracked in the ERP system. The total of the costs needs to be in the claim, so that it can be used in reports.

Challenges:

1. I can only use REST for retrieving the costs

2. The ERP system supports webhooks, which doesn't help us.

3. The total changes only rarely, I don't want to create a new version for an instance on a daily basis if nothing changes.

What are your ideas for solving this?

My ideas up to this point:

1. Update workflow with item list

I wanted to:

- Create an item list with all active workflow instance

- Fetch the data from the ERP system for each row

- Compare the old against the new data

- Trigger an update if something changed

Problem: Executing xxxx REST Requests in a "for each" loop in an item list will probably exceed the timeout of path transition.

There's no option to filter the item list in the "for each" loop. Using a "any collection" source for the for each would work, but than I can't update the item list row.

I could use some if condition in the item list to execute only a part of the item list. This would work technically but I would still get a log entry in the history for each item in the item list. This will bloat the database as I would have to repeat this path until instance was handled.

2. Update workflow with item list and sub workflows

Instead of updating the data in the item list, I will use the item list to spawn sub workflows for each row. The sub workflow would than fetch the data for it's claim, compare it and do the update if necessary.

This would result again in xxxx REST requests, but at least they are scoped to their own workflow instance and shouldn't run into a timeout. If some requests fail, because there are to many. Maybe I can check here also that there are not more than xx Workflows in the "fetch data" step to reduce the amount of request send to the ERP system.

Overall problem: This is running on webconapps in a multitenant environment -> I don't have the archive action, so I can't delete these helper workflows.

Best regards,

Daniel

Periodically updating data via rest

Hi,

If I understood the assumptions correctly,

I assume that you can create a list of elements for which you want to download data from the erp in the source for the each loop. Then, in the each loop, add the erp data from each iteration of the erp call with the insert sql action to a separate table, e.g. in a separate database (so as not to add it to the webcon database) + additional data returned in the each source, such as instance id, etc.,

Then, after the correct execution of the entire run of each, perform a comparison / update of the elements.

Regards

Hi,

If I understood the assumptions correctly,

I assume that you can create a list of elements for which you want to download data from the erp in the source for the each loop. Then, in the each loop, add the erp data from each iteration of the erp call with the insert sql action to a separate table, e.g. in a separate database (so as not to add it to the webcon database) + additional data returned in the each source, such as instance id, etc.,

Then, after the correct execution of the entire run of each, perform a comparison / update of the elements.

Regards

Hi Karol,

you have understood it correctly. I would like to use the idea as this would allow me to use a "filtered foreach loop" using "any collection".

In my case it's not an option. I don't have another database I can write to nor can I create one.

Best regards,

Daniel

Hi Karol,

you have understood it correctly. I would like to use the idea as this would allow me to use a "filtered foreach loop" using "any collection".

In my case it's not an option. I don't have another database I can write to nor can I create one.

Best regards,

Daniel

Maybe create a temporary table at the beginning and then drop after doing the operation? It's just a theory, but I don't know what your exact rights/restrictions are either.

Regards.

Hi Daniel, Karol

I thought about that temporary table, and it might cause troubles, as they are dropped at the end of session. Here comes the question how does Webcon handles sql sessions. Also I'm not sure if in context of bps_user you will be able to create such table. Although I really like the idea of some additional `storage`.

Maybe a way with PowerShell Script?

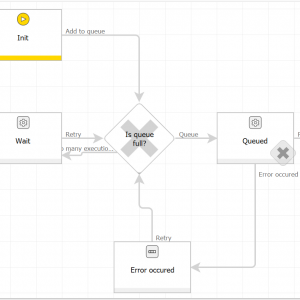

Create 2 step workflow, with cyclic action on 2nd step executed every x time.

Put the logic in the script:

1. Take comma separated identifiers as array, or mayble json array of id + total cost + wfd_id (should be possible with sql buisness rule).

2. Loop through all those ids, and request data from erp.

3. Inside the loop compare values, if they chaged, then put id + new value in new array.

4. Loop through chaged ids, and update workflows using WEBCON REST.

I'm not sure how well will it do in terms of speed/timeouts, but i'll look for a way to split it into batches if needed.

It's not great, because scripting is not really low-code, but it should make it work :)

Hi Daniel, Karol

I thought about that temporary table, and it might cause troubles, as they are dropped at the end of session. Here comes the question how does Webcon handles sql sessions. Also I'm not sure if in context of bps_user you will be able to create such table. Although I really like the idea of some additional `storage`.

Maybe a way with PowerShell Script?

Create 2 step workflow, with cyclic action on 2nd step executed every x time.

Put the logic in the script:

1. Take comma separated identifiers as array, or mayble json array of id + total cost + wfd_id (should be possible with sql buisness rule).

2. Loop through all those ids, and request data from erp.

3. Inside the loop compare values, if they chaged, then put id + new value in new array.

4. Loop through chaged ids, and update workflows using WEBCON REST.

I'm not sure how well will it do in terms of speed/timeouts, but i'll look for a way to split it into batches if needed.

It's not great, because scripting is not really low-code, but it should make it work :)

Hi Karol, hi Maks,

I'm running on WEBCONAPPS, so SQL and PowerShell actions are not available.

What I could do is a slight modification of Maks idea of the csv.

Instead of a csv file I could use multiline text fields to store the concatenated values, of the DET_ID, and totals.

Outside the foreach loop I could use a "Change item list value" to extract the data and update the item list

Could be better than spawning a whole bunch of sub-workflows.

Thanks for your ideas. :)

@Maks

You don't need to look into splitting it up in batches. I will use an "action" column, which defines which rows needs to be processed next. The action will then be modified and I can reuse it.

Best regards,

Daniel

Hi Karol, hi Maks,

I'm running on WEBCONAPPS, so SQL and PowerShell actions are not available.

What I could do is a slight modification of Maks idea of the csv.

Instead of a csv file I could use multiline text fields to store the concatenated values, of the DET_ID, and totals.

Outside the foreach loop I could use a "Change item list value" to extract the data and update the item list

Could be better than spawning a whole bunch of sub-workflows.

Thanks for your ideas. :)

@Maks

You don't need to look into splitting it up in batches. I will use an "action" column, which defines which rows needs to be processed next. The action will then be modified and I can reuse it.

Best regards,

Daniel

Hi Daniel,

Another approach could be to use an automation on timeout. Then in automation use a REST action which will save the result in a local parameter and the form validation action which will check if anything has changed. If nothing has changed it will return false and stop the automation. The last step will be to assign the data from the parameter to the form.

With this approach, each document will only care about its own data and you won't need to store additional information anywhere. Validation failures will not count towards timeout execution errors and will not update document version. The only downside that comes to my mind is that every timeout execution will be logged in the history.

Hi Daniel,

Another approach could be to use an automation on timeout. Then in automation use a REST action which will save the result in a local parameter and the form validation action which will check if anything has changed. If nothing has changed it will return false and stop the automation. The last step will be to assign the data from the parameter to the form.

With this approach, each document will only care about its own data and you won't need to store additional information anywhere. Validation failures will not count towards timeout execution errors and will not update document version. The only downside that comes to my mind is that every timeout execution will be logged in the history.

Hi Kamil,

yes, using a timeout would be an option if the workflow instance would be short lived. In my case they may be there for months or even longer. Using the timeout would create a new version each time as you mentioned.

That's not something I want to have. :)

Best regards,

Daniel

Hi Kamil,

yes, using a timeout would be an option if the workflow instance would be short lived. In my case they may be there for months or even longer. Using the timeout would create a new version each time as you mentioned.

That's not something I want to have. :)

Best regards,

Daniel

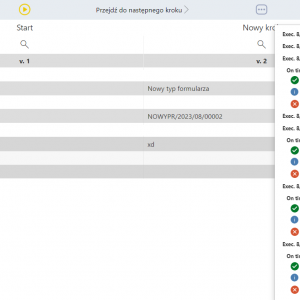

The timeout itself will not create a new version of the document, only updating the data will change the version. If, as a result of validation, it turns out that the document does not require changes, the automation will be canceled, the document will not be saved and its version will not be updated. Also, saving logs to the history does not affect the version of the document. I have attached a screenshot of the history of the document. All action calls were made and logged for the same version of the element.

The timeout itself will not create a new version of the document, only updating the data will change the version. If, as a result of validation, it turns out that the document does not require changes, the automation will be canceled, the document will not be saved and its version will not be updated. Also, saving logs to the history does not affect the version of the document. I have attached a screenshot of the history of the document. All action calls were made and logged for the same version of the element.

Hi Kamil,

thanks for the reminder. I tend to use primarily the "path transition" option, so I automatically get a new version. Using the operation mode "only action" would make a difference.

@All

Thanks for your input and I'm sorry about my delayed replies. There's always something interrupting me and changing my priorities. I'm still working on it. :)

In the end I will have to got with the sub workflow option.

The reason for this is the following.

The REST API doesn't return a total for a claim but a list of all entries. I have to generate a URL with filter information and generate a total myself. For this I have two options

a) I can either use a REST SDK storing the result in an item list, calculating the sum about everything and storing it in a field myself.

b) I use the Sum function for "Data source value" and store the result in a field. The filter can be generated by accessing the form fields in the specialized data source.

In context of the bigger picture, that I need to do option a xxx times, I prefer the second option. :)

Best regards,

Daniel